Nolan Wagener

I am a Senior Software Engineer at Overland AI, working on advancing off-road autonomy. I received my PhD in Robotics from the Georgia Institute of Technology, where I was advised by Byron Boots (UW) and Panagiotis Tsiotras. I am interested in machine learning for robotics, particularly as a way to increase the capabilities of robots. I am fortunate to have been funded by the NSF Graduate Research Fellowship, and I have received and been nominated for several "best paper" awards at robotics conferences. I have also interned in the reinforcement learning group at Microsoft Research.
Email / CV / GitHub / Google Scholar / LinkedIn

Research

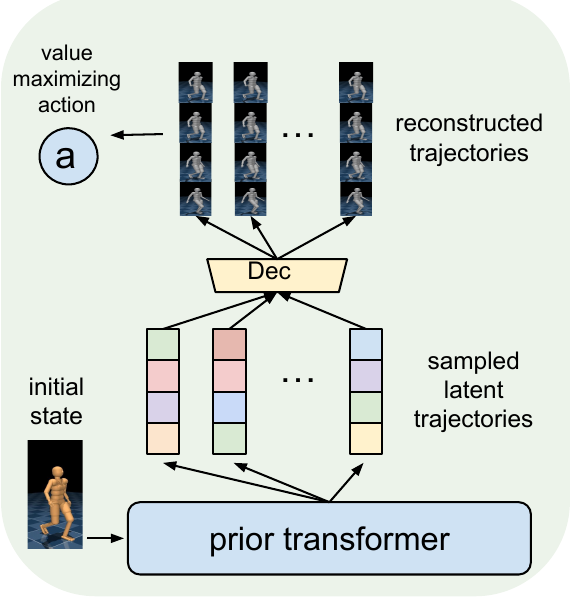

H-GAP: Humanoid Control with a Generalist Planner
Zhengyao Jiang*, Yingchen Xu*, Nolan Wagener, Yicheng Luo, Michael Janner, Edward Grefenstette, Tim Rocktäschel, Yuandong Tian
International Conference on Learning Representations (ICLR), 2024
Spotlight Presentation
Paper / Website / Code / Poster
A single humanoid policy trained on MoCapAct that can be used for many downstream tasks.

Machine Learning for Agile Robotic Control
Nolan Wagener
Georgia Institute of Technology, 2023
Thesis / Defense
My PhD thesis, covering several ways in which machine learning can be used for robotic control.

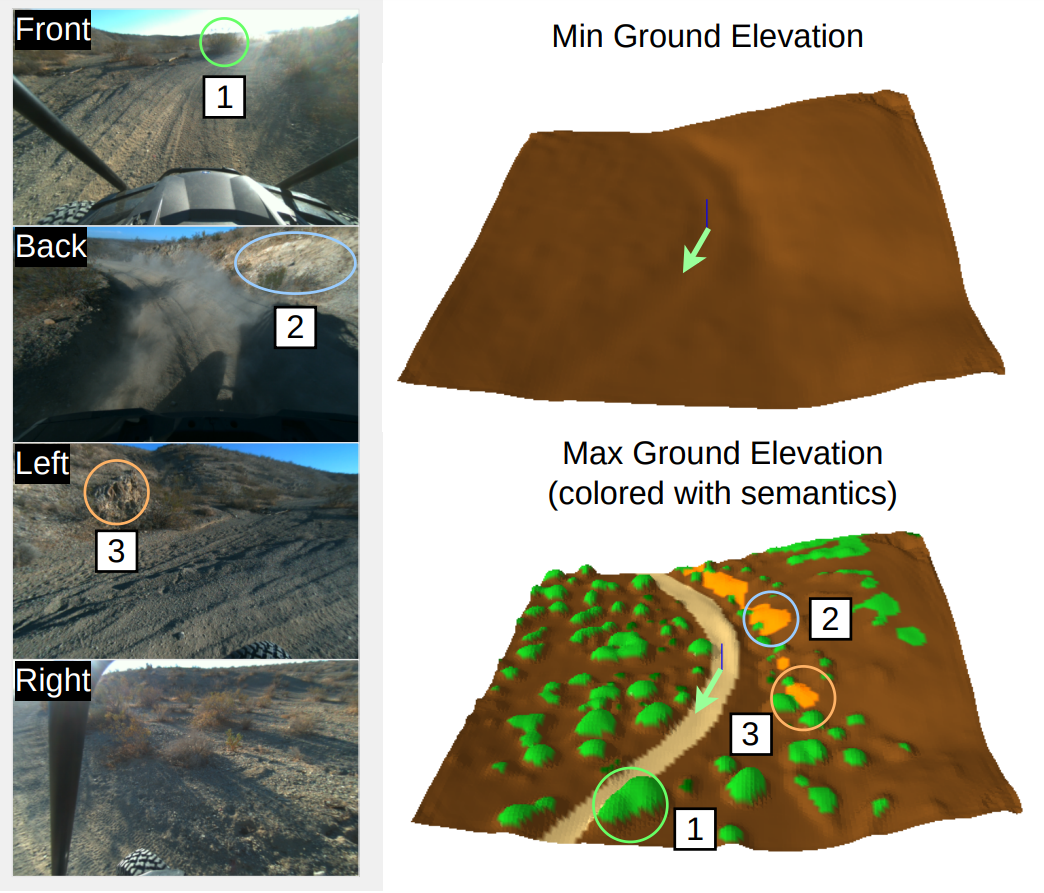

TerrainNet: Visual Modeling of Complex Terrain for High-Speed, Off-Road Navigation
Xiangyun Meng, Nathan Hatch, Alexander Lambert, Anqi Li, Nolan Wagener, Matthew Schmittle, JoonHo Lee, Wentao Yuan, Zoey Chen, Samuel Deng, Greg Okopal, Dieter Fox, Byron Boots, Amirreza Shaban
Robotics: Science and Systems (RSS), 2023
Paper / Website / Talk / Poster / Slides
A vision-based system for off-road driving that predicts semantic and geometric terrain information from stereo camera input.

MoCapAct: A Multi-Task Dataset for Simulated Humanoid Control
Nolan Wagener, Andrey Kolobov, Felipe Vieira Frujeri, Ricky Loynd, Ching-An Cheng, Matthew Hausknecht
Neural Information Processing Systems (NeurIPS), 2022
Paper / Website / Blog / Talk / Short Talk / Code / Dataset / Poster / Slides
We release a dataset of high-quality experts and their rollouts for tracking 3.5 hours of MoCap data in dm_control. We use this dataset to train policies that can track the entire dataset, efficiently transfer to other tasks, and perform physics-based motion completion.

DARPA Robotic Autonomy in Complex Environments with Resiliency (RACER)
University of Washington, 2021
Website / Article / Video
High-speed off-road autonomy in complex terrain. I led the development of a control stack that enables a Polaris RZR vehicle to traverse a wide variety of unstructured environments and terrains.

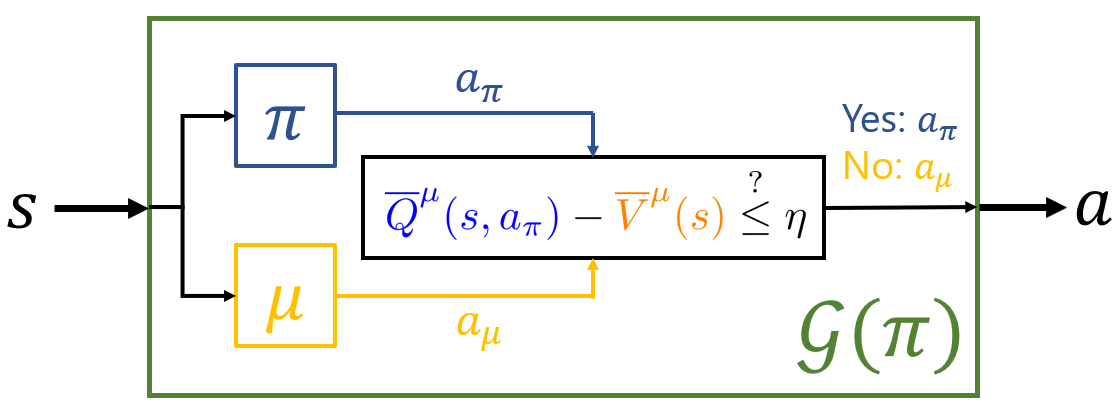

Safe Reinforcement Learning Using Advantage-Based Intervention
Nolan Wagener, Byron Boots, Ching-An Cheng
International Conference on Machine Learning (ICML), 2021
Paper / Talk / Code / Poster / Slides
An intervention-based technique for safe reinforcement learning that relies on an advantage-function estimate with respect to a given baseline policy. Our work comes with strong theoretical guarantees on performance after training and on safety both during and after training, which we corroborate with simulated experiments.
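To make the intervention idea a little more concrete, here is a minimal sketch of an advantage-based intervention rule in the spirit of the description above. Whenever the estimated cost advantage of the agent's proposed action, relative to the baseline policy, exceeds a threshold, the baseline's action is used instead. The function names, the tabular cost estimates, the toy "cliff" problem, and the threshold value are all hypothetical, and the sketch does not show how interventions feed back into training.

```python
from typing import Callable, Hashable, Tuple


def make_intervention_rule(
    q_cost: Callable[[Hashable, Hashable], float],
    v_cost: Callable[[Hashable], float],
    baseline_policy: Callable[[Hashable], Hashable],
    threshold: float,
) -> Callable[[Hashable, Hashable], Tuple[Hashable, bool]]:
    """Build a rule that overrides risky actions with the baseline policy's action.

    q_cost(s, a) and v_cost(s) are assumed estimates of the baseline policy's
    expected future cost, so q_cost(s, a) - v_cost(s) is the cost advantage of
    taking `a` in `s` and then following the baseline.
    """

    def act(state: Hashable, proposed_action: Hashable) -> Tuple[Hashable, bool]:
        advantage = q_cost(state, proposed_action) - v_cost(state)
        if advantage > threshold:
            # The proposed action looks too risky relative to the baseline: intervene.
            return baseline_policy(state), True
        # Otherwise let the learner's action through unchanged.
        return proposed_action, False

    return act


# Hypothetical toy problem: states 0..3 on a line, with a cliff past state 3.
def q_cost(state: int, action: str) -> float:
    return 1.0 if (state == 3 and action == "right") else 0.0


def v_cost(state: int) -> float:
    return 0.0  # the baseline always moves "left" and never incurs cost here


rule = make_intervention_rule(q_cost, v_cost, baseline_policy=lambda s: "left", threshold=0.5)
print(rule(0, "right"))  # ('right', False)
print(rule(3, "right"))  # ('left', True)
```

Because the check compares against the baseline's own value, the rule only intervenes when an action is estimated to be worse than simply deferring to the baseline. Actions that are merely unfamiliar are allowed through.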

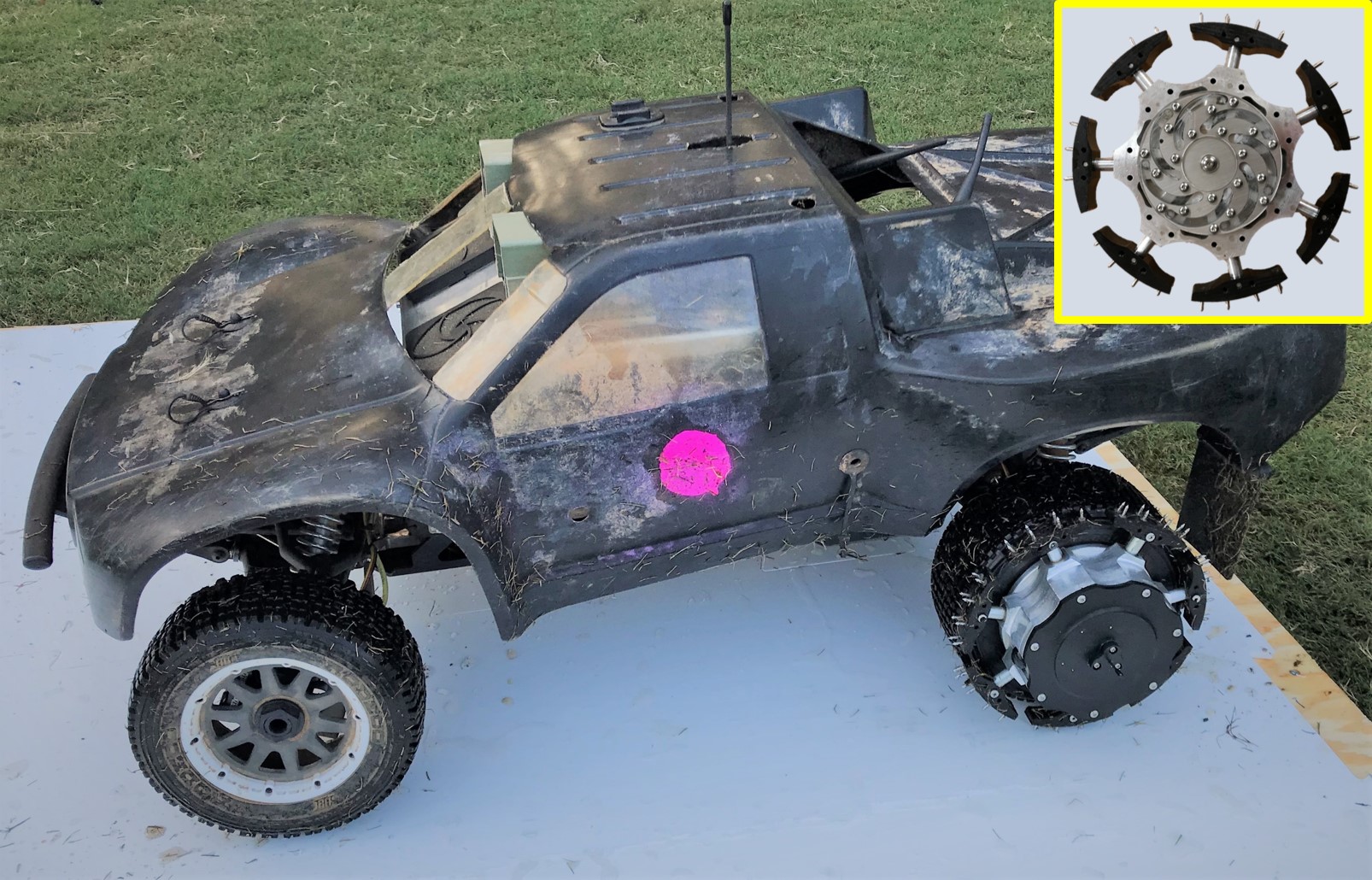

Exploiting Singular Configurations for Controllable, Low-Power Friction Enhancement on Unmanned Ground Vehicles
Adam Foris, Nolan Wagener, Byron Boots, Anirban Mazumdar
IEEE Robotics and Automation Letters (RA-L), 2020
Paper / Video / Talk
A low-power wheel attachment that greatly increases traction on low-friction surfaces such as synthetic ice.

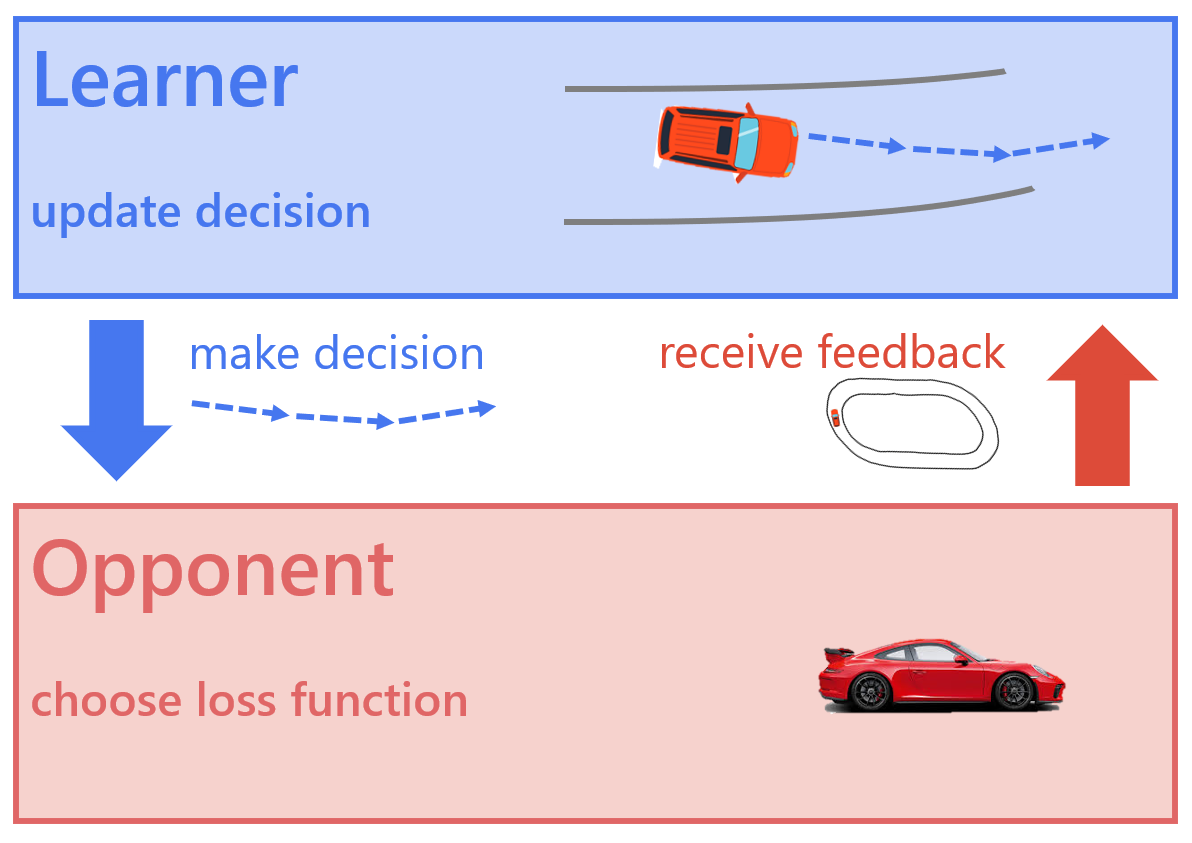

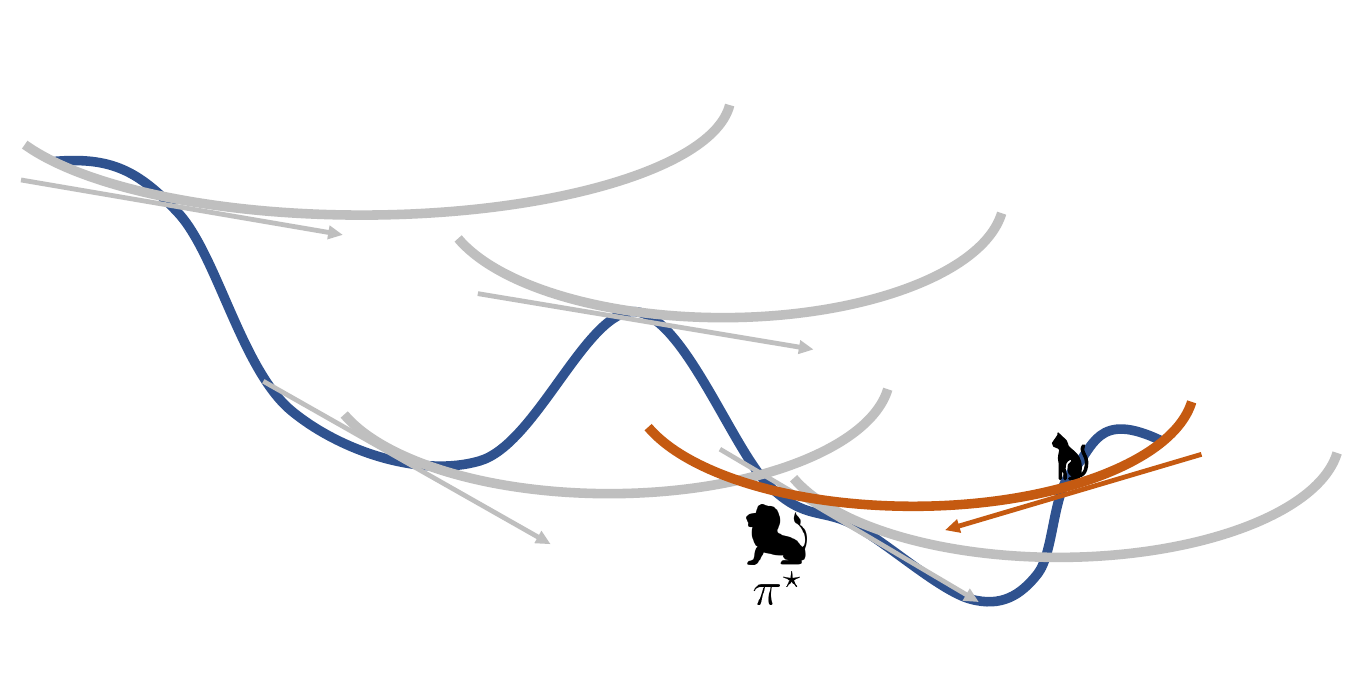

An Online Learning Approach to Model Predictive Control
Nolan Wagener*, Ching-An Cheng*, Jacob Sacks, Byron Boots
Robotics: Science and Systems (RSS), 2019
Winner of Best Student Paper Award
Finalist for Best Systems Paper Award
Paper / Video / Talk / Poster / Slides
A connection between model predictive control (MPC) and online learning, demonstrating that many well-known MPC algorithms are special cases of dynamic mirror descent.

Fast Policy Learning Through Imitation and Reinforcement
Ching-An Cheng, Xinyan Yan, Nolan Wagener, Byron Boots
Uncertainty in Artificial Intelligence (UAI), 2018
Plenary Presentation
Paper / Talk / Poster
A simple yet effective policy optimization algorithm that first performs imitation learning and then switches to reinforcement learning. Theory and experiments show that the policy learns nearly as fast as it would if reinforcement learning had started from the expert being imitated.

Information Theoretic MPC for Model-Based Reinforcement Learning
Grady Williams, Nolan Wagener, Brian Goldfain, Paul Drews, James Rehg, Byron Boots, Evangelos Theodorou
IEEE International Conference on Robotics and Automation (ICRA), 2017
Finalist for Best Conference Paper Award
Paper / Video / Code / Colab / Poster / Slides
MPPI, a sampling-based model predictive control algorithm that can handle general nonlinear dynamics and discontinuous costs. We demonstrate MPPI's capability on an aggressive driving task.
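As a rough illustration of the sampling-based idea, here is a minimal pure-Python sketch of an MPPI-style update. It samples noisy control sequences around a nominal plan, rolls each one out through the dynamics, weights each rollout by exp(-cost / lambda), and shifts the plan by the weighted average of the sampled noise. The 1-D system x_{t+1} = x_t + u_t * dt, the cost, and the parameter values (num_samples, sigma, lam, horizon) are assumptions chosen for the example, not values from the paper. The sketch also omits some terms of the full path-cost weighting used in the paper.

```python
import math
import random


def rollout_cost(x0, controls, dynamics, cost):
    """Accumulated cost of applying `controls` open-loop from state x0."""
    x, total = x0, 0.0
    for u in controls:
        x = dynamics(x, u)
        total += cost(x, u)
    return total


def mppi_step(x0, nominal, dynamics, cost, num_samples=256, sigma=0.5, lam=1.0, rng=random):
    """One MPPI-style update of the nominal control sequence (simplified)."""
    horizon = len(nominal)
    noises, costs = [], []
    for _ in range(num_samples):
        # Gaussian perturbation of every control in the plan.
        eps = [rng.gauss(0.0, sigma) for _ in range(horizon)]
        perturbed = [u + e for u, e in zip(nominal, eps)]
        noises.append(eps)
        costs.append(rollout_cost(x0, perturbed, dynamics, cost))
    # Exponential (softmin) weights; subtracting the best cost avoids overflow.
    best = min(costs)
    weights = [math.exp(-(c - best) / lam) for c in costs]
    total = sum(weights)
    weights = [w / total for w in weights]
    # New plan = old plan + weighted average of the sampled perturbations.
    updated = []
    for t, u in enumerate(nominal):
        updated.append(u + sum(w * eps[t] for w, eps in zip(weights, noises)))
    return updated


def run_mpc(x0, target, steps=40, horizon=15, seed=0):
    """Receding-horizon loop on a hypothetical 1-D system x' = x + u * dt."""
    rng = random.Random(seed)
    dt = 0.1
    dynamics = lambda x, u: x + dt * u
    # Hypothetical cost: squared distance to the target plus a small control penalty.
    cost = lambda x, u: (x - target) ** 2 + 0.01 * u ** 2
    nominal = [0.0] * horizon
    x = x0
    for _ in range(steps):
        nominal = mppi_step(x, nominal, dynamics, cost, rng=rng)
        x = dynamics(x, nominal[0])  # apply only the first control
        nominal = nominal[1:] + [nominal[-1]]  # shift the plan to warm-start the next step
    return x


print(run_mpc(0.0, 1.0))  # should end close to the target 1.0
```

`run_mpc` replans at every step, applies only the first control, and shifts the remaining plan forward as a warm start. This is the receding-horizon pattern that MPC relies on. Because the cost enters only through sampled rollouts, nothing in the update requires the dynamics or cost to be differentiable.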

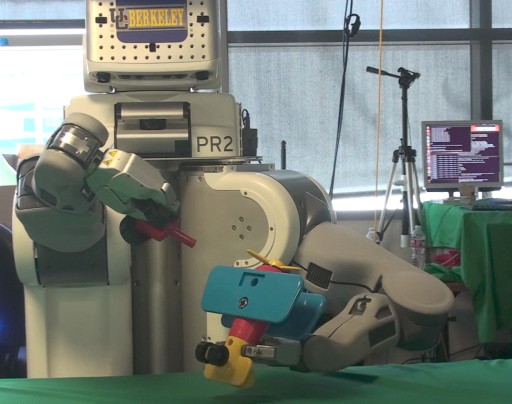

Learning Contact-Rich Manipulation Skills with Guided Policy Search
Sergey Levine, Nolan Wagener, Pieter Abbeel
IEEE International Conference on Robotics and Automation (ICRA), 2015
Winner of Best Robotic Manipulation Paper Award
Paper / Article / Video / Talk
An application of guided policy search, a reinforcement learning algorithm that alternates between trajectory optimization and supervised learning, which allows a PR2 robot to efficiently learn manipulation skills.

Design and source code from Leonid Keselman's website, which itself is based on Jon Barron's website.